The new generation data lake architecture has evolved to massively scale to hundreds of terabytes with acceptable read/write speeds. It’s the next-gen cloud petabyte data lake architecture you cannot afford to miss.

The volumes of data used for Machine Learning projects are growing relentlessly, and the new generation data lake is designed to cope with them. Data scientists and data engineers have turned to Data Lakes to store very large volumes of data and find meaningful insights. Data Lake architectures have evolved over the years to scale to hundreds of terabytes with acceptable read/write speeds. But most Data Lakes, whether open-source or proprietary, have hit a performance and cost wall at the petabyte scale.

Scaling to petabytes with fast query speeds requires a new architecture. Fortunately, the new open-source petabyte architecture is here. The critical ingredient comes in the form of new table formats offered by open-source solutions like Apache Hudi™, Delta Lake™, and Apache Iceberg™. These components enable Data Lakes to scale to petabytes while keeping queries fast.

To better understand how these new table formats come to the rescue in the new generation data lake, we need to know which components of current Data Lake architectures scale well and which ones do not. Unfortunately, when a single piece fails to scale, it becomes a bottleneck and prevents the entire Data Lake from scaling to petabytes efficiently.

We will focus on the open-source Data Lake ecosystem to better understand which components scale well and which ones can prevent a Data Lake from scaling to the petabytes. We will then see how Iceberg can help us massively scale. The lessons learned here can be applied to proprietary Data Lakes.

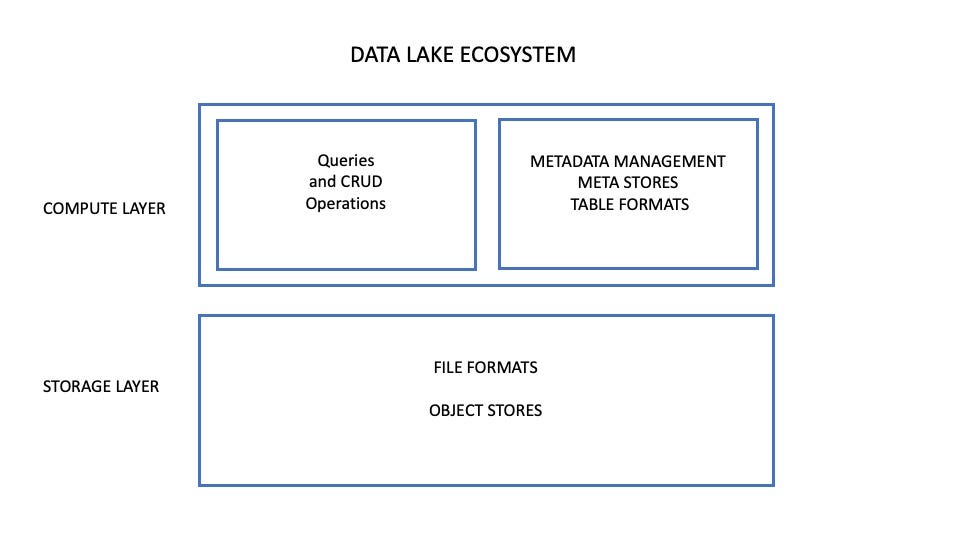

The Data Lake Architecture

As shown in the diagram below, there are two main layers to a typical Data Lake architecture. The storage layer is where the data lives, and the compute layer is where the compute and analytical operations are executed.

Object Stores and File Formats — scalable

Data Lake storage in the new generation data lake is handled by object stores. We can massively scale object stores by simply adding more servers. Objects are grouped into containers (called buckets in Amazon S3), and these containers span different servers, making object stores extremely scalable, resilient, and (almost) fail-safe.

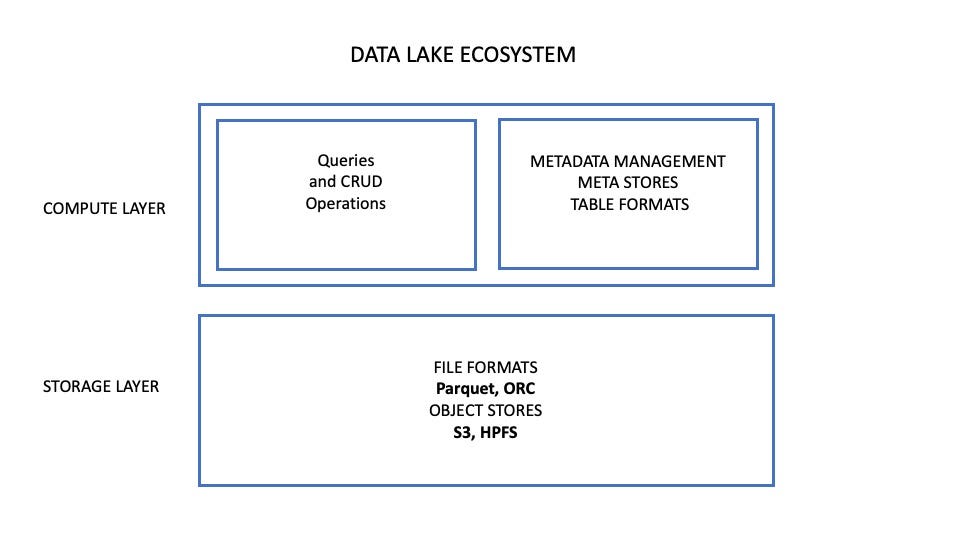

Today, the most popular options are Amazon S3™ (Simple Storage Service), offered by Amazon Web Services™, and the Hadoop Distributed File System (HDFS)™, a distributed file system that fills the same storage role. A flat, massively scalable, horizontally distributed architecture is used to locate these containers and the objects in them. Very similar services are available on GCP and Azure.
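
To make the idea of a flat, horizontally distributed namespace more concrete, here is a minimal sketch of how an object key could be mapped to a server with a stable hash. This is an illustration only: the function `pick_server`, the bucket name `lake`, the node names, and the key layout are all hypothetical, and real object stores do not necessarily place data this way (a simple modulo scheme like this one would move many objects whenever a server is added).

```python
import hashlib
from typing import Sequence


def pick_server(bucket: str, key: str, servers: Sequence[str]) -> str:
    """Map a (bucket, key) pair to one server using a stable hash.

    Hypothetical placement rule for illustration; not how any specific
    object store is implemented.
    """
    digest = hashlib.sha256(f"{bucket}/{key}".encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# Hypothetical cluster and object keys.
servers = ["node-a", "node-b", "node-c"]
keys = [f"sales/2021/part-{i:04d}.parquet" for i in range(1000)]

# There is no directory tree to walk: the key alone decides the location.
placement = {key: pick_server("lake", key, servers) for key in keys}
counts = {s: sum(1 for v in placement.values() if v == s) for s in servers}
print(counts)  # objects per server, spread roughly evenly
```

Because the location is computed from the key rather than looked up in a hierarchy, adding servers spreads the load across more machines, which is the property the storage layer relies on.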

Apache Parquet™ and Apache ORC™ are commonly used file formats. Both are columnar formats, which scale much better than row-based formats for analytics. A reader fetches only the columns a query needs, which significantly speeds up reads.
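
The benefit of a columnar layout can be shown with a toy example in plain Python. It does not use Parquet or ORC; the sample records and the helper names `total_from_rows` and `total_from_columns` are made up for illustration. The sketch simply counts how many values each layout has to touch to answer the same aggregate query.

```python
# Row-based layout: each record stores all of its fields together.
rows = [
    {"order_id": 1, "country": "FR", "amount": 20.0},
    {"order_id": 2, "country": "US", "amount": 35.5},
    {"order_id": 3, "country": "FR", "amount": 12.5},
]

# Columnar layout: one contiguous list per column.
columns = {name: [row[name] for row in rows] for name in rows[0]}


def total_from_rows(rows):
    """Sum 'amount' from a row layout; every field of every row is read."""
    values_touched = 0
    total = 0.0
    for row in rows:
        values_touched += len(row)  # the whole record is loaded
        total += row["amount"]
    return total, values_touched


def total_from_columns(columns):
    """Sum 'amount' from a columnar layout; only one column is read."""
    amounts = columns["amount"]
    return sum(amounts), len(amounts)


print(total_from_rows(rows))        # (68.0, 9)
print(total_from_columns(columns))  # (68.0, 3)
```

Both layouts return the same total, but the columnar one touches a third of the values here. With wide tables and queries that select only a few columns, the gap grows accordingly.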

The storage layer with the object stores and file format systems described above looks like this.

Data Processing — scalable

The compute layer executes all commands, including create, read, update, and delete (CRUD) operations as well as advanced queries and analytical computations. In the new generation data lake, it also hosts the meta store, which holds and manages the metadata, the file locations, and any other information that must be updated transactionally.
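
As a rough illustration of what "updated in a transactional way" means for a meta store, the sketch below keeps, for each table, a version number and the list of files that make up the table. A commit succeeds only if the writer saw the latest version. The class `ToyMetastore`, its methods, and the file paths are hypothetical and do not reflect the API of any real meta store.

```python
import threading


class ToyMetastore:
    """In-memory stand-in for a meta store (illustration only)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._tables = {}  # table name -> (version, tuple of file paths)

    def create_table(self, name):
        with self._lock:
            if name in self._tables:
                raise ValueError(f"table {name!r} already exists")
            self._tables[name] = (0, ())

    def snapshot(self, name):
        """Return the current (version, files) pair for a table."""
        with self._lock:
            return self._tables[name]

    def commit(self, name, expected_version, added=(), removed=()):
        """Swap in a new file list atomically if no one committed first."""
        with self._lock:
            version, files = self._tables[name]
            if version != expected_version:
                return False  # stale writer: re-read the snapshot and retry
            removed_set = set(removed)
            kept = tuple(f for f in files if f not in removed_set)
            self._tables[name] = (version + 1, kept + tuple(added))
            return True


store = ToyMetastore()
store.create_table("orders")
version, files = store.snapshot("orders")

ok = store.commit("orders", version, added=["orders/part-0.parquet"])
stale = store.commit("orders", version, added=["orders/part-1.parquet"])
print(ok, stale)                 # True False
print(store.snapshot("orders"))  # (1, ('orders/part-0.parquet',))
```

Readers always see a complete file list for one version, never a half-applied update, which is the guarantee the compute engines depend on when they ask where a table's files live.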

Apache Spark™ is one of the more popular open-source data processing frameworks, as it can handle large-scale data sets with ease. Benchmarks have reported Spark running up to 100x faster than Hadoop MapReduce for in-memory workloads. Spark achieves its scalability and speed by caching data, running computations in memory, and executing multi-threaded parallel operations. The Spark APIs allow many components of the open-source Data Lake to work with Spark.

PrestoSQL™, now rebranded as Trino™, is a distributed SQL query engine designed for fast analytics on large data sets. Presto was initially developed at Facebook and open-sourced in 2013. It can access and query data from several different data sources with a single query and execute joins across tables that live in separate storage systems like Hadoop and S3. It uses a coordinator to manage a set of workers running on a cluster of machines.

Presto does not have its own meta store. The Presto coordinator needs to call a meta store to find out in which containers the files are stored. It generates a query plan and then distributes the work to the worker nodes. Although efficient, the Presto coordinator is both a single point of failure and a potential bottleneck, because every query passes through it.
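
The coordinator and worker pattern described above can be sketched in a few lines of Python. This is not Presto or Trino code: the dictionaries `FILE_LOCATIONS` and `FILE_CONTENTS` stand in for a meta store and an object store, the `s3://lake/...` paths are invented, and threads play the role of worker nodes.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical meta store answer: which files make up each table.
FILE_LOCATIONS = {
    "orders": [
        "s3://lake/orders/part-0",
        "s3://lake/orders/part-1",
        "s3://lake/orders/part-2",
        "s3://lake/orders/part-3",
    ],
}

# Hypothetical file contents (order amounts), standing in for the object store.
FILE_CONTENTS = {
    "s3://lake/orders/part-0": [10, 20],
    "s3://lake/orders/part-1": [5],
    "s3://lake/orders/part-2": [7, 8],
    "s3://lake/orders/part-3": [50],
}


def plan(table, num_workers):
    """Coordinator step: look up the files and split them across workers."""
    files = FILE_LOCATIONS[table]
    return [files[i::num_workers] for i in range(num_workers)]


def worker_sum(split):
    """Worker step: scan the assigned files and return a partial sum."""
    return sum(sum(FILE_CONTENTS[path]) for path in split)


def coordinator_sum(table, num_workers=2):
    """Plan the query, fan it out to the workers, then merge the results."""
    splits = plan(table, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(worker_sum, splits))
    return sum(partials)


print(coordinator_sum("orders"))  # 100
```

The workers scale out easily, but the file lookup, the planning, and the final merge all run in the single coordinator, which is why it can become the bottleneck as the number of files grows.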

Although both Spark and Presto are used as SQL interfaces to the new generation data lake, they do not serve the same purpose. Presto was designed to run large queries over big datasets. It is used by data scientists and data analysts to explore large amounts of data. Spark, on the other hand, is primarily used by data engineers for data preparation, processing, and transformation. Since their purposes differ, the two often coexist in Data Lake environments.

This blog post is an excerpt of an article posted by Paul Sinaï on Towards Data Science. Continue reading the full article.

The image used in this post is a royalty free image from Unsplash.